TL;DR & Summary

Reading time: 4 minutes

You publish a page and leave indexing to search engines, which isn’t wrong. Bing supports instant indexing nowadays, and Google has its own tools (though they aren’t instant).

What’s wrong is that people misunderstand indexing & then question whether SEO works at all.

Quite often, pages don’t get indexed at all. It’s not just you; even the most authoritative domains face this.

Indexing is the process in which search engines store a copy of your page (as a cache) in their multi-billion-dollar data centers so they can serve it to their users. Understandably, they don’t want unworthy pages occupying space on their servers.

By the end of this issue, here’s what you will understand well:

- Why is indexing so important (& expensive)?

- How do you keep your site’s indexing healthy?

- How to use Google’s Indexing API to get pages indexed

- Why aren’t your pages getting indexed?

The catch is that indexing is only the beginning. Pages stored in the index are organized by subject, and then the algorithm ranks them based on many ranking factors. Getting your pages indexed alone won’t make the cut.

There’s a long journey ahead after your pages get indexed.

You can’t make the journey shorter, but you sure can make the journey a pleasing one.

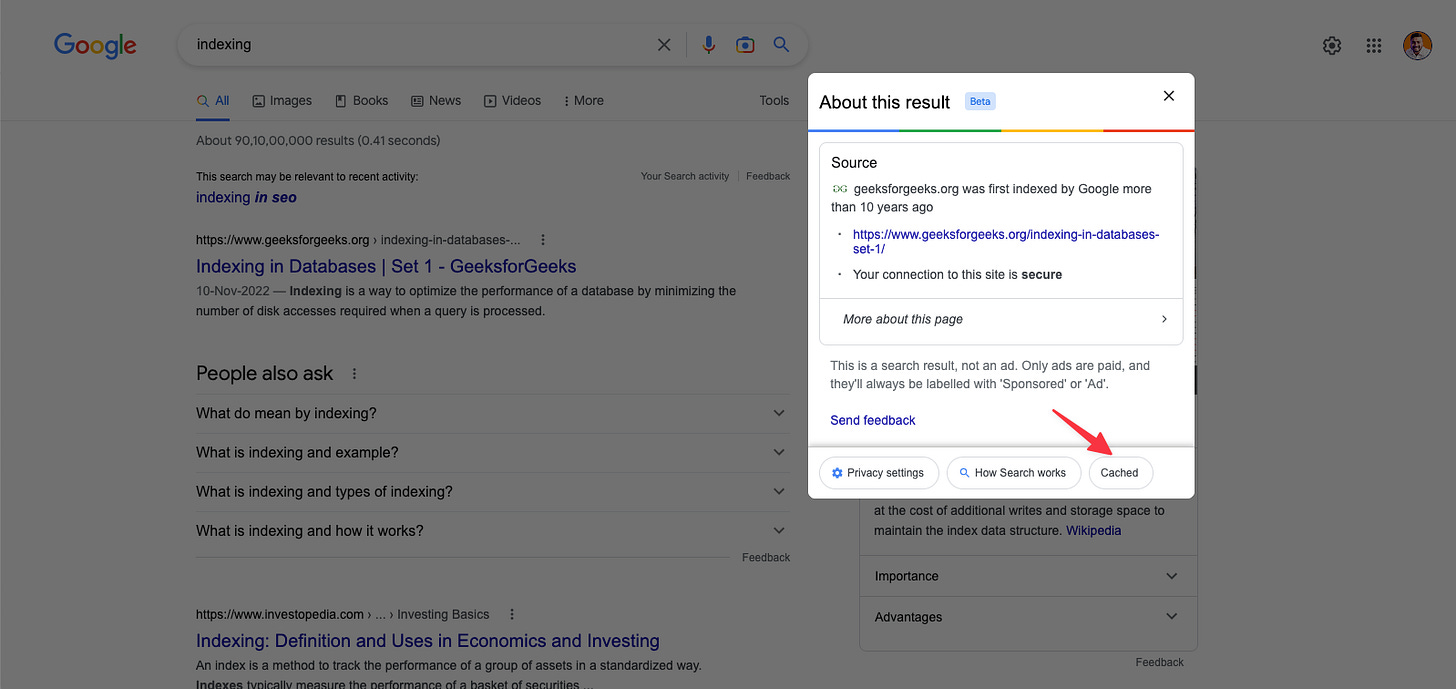

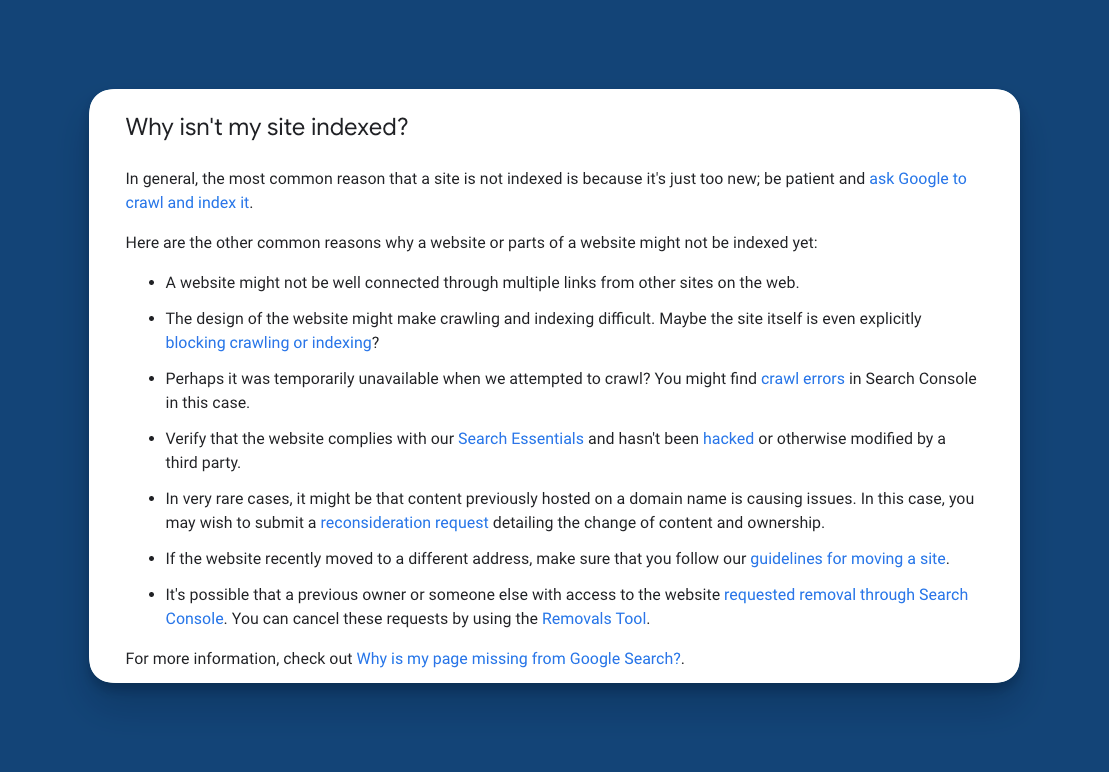

There are several reasons why your site might not get indexed. Below, I quote Google’s official documentation listing the most common reasons a site isn’t indexed.

Making a page indexable is easy, but whether it actually gets indexed is up to the search engine.

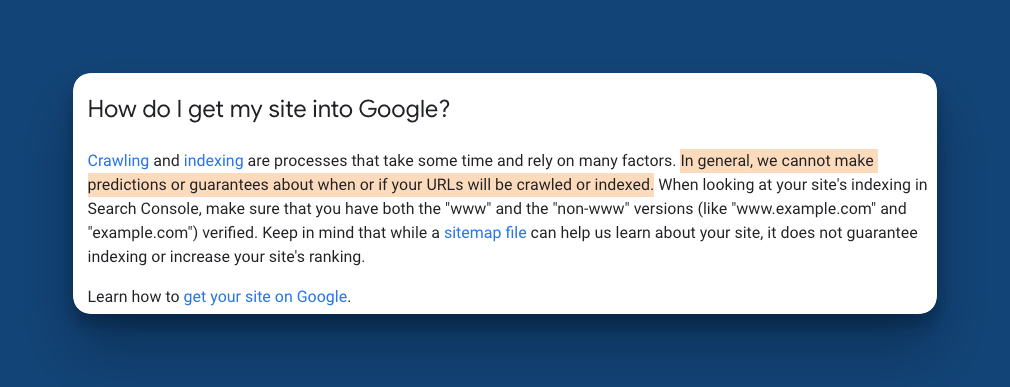

Google itself doesn’t guarantee that a URL will ever get indexed, so anyone who claims they can guarantee your pages get indexed is outright lying.
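What you *can* control is that nothing on your side blocks indexing. Here’s a minimal Python sketch that checks three common blockers: a robots.txt rule stopping Googlebot from crawling the URL, a `noindex` robots meta tag, and a `noindex` X-Robots-Tag header. It assumes you’ve already fetched the robots.txt text, the page HTML, and the header value yourself; `indexability_problems` and `RobotsMetaParser` are hypothetical names, not part of any Google tool.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attrs = dict(attrs)
        name = (attrs.get("name") or "").lower()
        if name in ("robots", "googlebot"):
            content = (attrs.get("content") or "").lower()
            self.directives.extend(d.strip() for d in content.split(","))


def indexability_problems(url, robots_txt, html, x_robots_tag="", user_agent="Googlebot"):
    """Return a list of blockers found for `url` (an empty list means none were found)."""
    problems = []

    # 1. Does robots.txt allow this user agent to crawl the URL?
    robots = RobotFileParser()
    robots.parse(robots_txt.splitlines())
    if not robots.can_fetch(user_agent, url):
        problems.append("crawling blocked by robots.txt")

    # 2. Does the HTML carry a noindex (or "none") robots meta directive?
    parser = RobotsMetaParser()
    parser.feed(html)
    if "noindex" in parser.directives or "none" in parser.directives:
        problems.append("noindex in robots meta tag")

    # 3. Does the HTTP response carry a noindex X-Robots-Tag header?
    header = [d.strip().lower() for d in x_robots_tag.split(",")]
    if "noindex" in header or "none" in header:
        problems.append("noindex in X-Robots-Tag header")

    return problems
```

An empty list doesn’t mean the page *will* be indexed; it only means you haven’t told search engines to stay away.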

Creating what search engines are looking for increases the chance that they crawl & index those pages.

Most site owners think that search engines will take care of indexing & they don’t need to pay any attention to the process.

No wonder so many people complain about their pages not indexing🤔

Indexing is a process, & here I will share how to make sure your pages are indexable, that is, pages search engines would want to keep a copy of.

But before that, let me share some advanced ways to have a healthy indexing of all pages on your site.

How do you keep your site’s indexing healthy?

Apart from requesting that search engines crawl your page, here are some ways to help them index it:

- Use redirects with caution. If your content spans multiple domains, sub-domains, or sub-folders, set up proper redirects between them.

- Duplicate content is hard to avoid on the web, but you can manage it like a pro once you understand what search engines count as duplicate content.

- Don’t overthink sub-domain vs. sub-folder. Search engines are fine with either.

- Don’t use cheap CDNs that bounce users from one server to another in the name of a CDN. These confuse the hell out of search engines.
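Two common ways redirects go wrong are chains (A to B to C, where A should point straight at C) and loops (A to B and back to A). Here’s a minimal Python sketch that flags both, assuming you’ve exported your redirect rules into a simple source-to-target dictionary. The function names and the 10-hop cap are hypothetical choices for illustration.

```python
def resolve_redirects(start, redirects, max_hops=10):
    """Follow `redirects` (source URL -> target URL) from `start`.

    Returns (final_url, hops). URLs absent from the map are treated as final.
    Raises ValueError on a loop or on a chain longer than `max_hops`.
    """
    seen = {start}
    current = start
    hops = []
    while current in redirects:
        target = redirects[current]
        hops.append((current, target))
        if target in seen:
            path = " -> ".join([start] + [t for _, t in hops])
            raise ValueError(f"redirect loop: {path}")
        if len(hops) > max_hops:
            raise ValueError(f"redirect chain longer than {max_hops} hops from {start}")
        seen.add(target)
        current = target
    return current, hops


def audit_redirects(redirects):
    """Return {source: finding} for every redirect that isn't a single clean hop."""
    report = {}
    for source in redirects:
        try:
            final, hops = resolve_redirects(source, redirects)
        except ValueError as err:
            report[source] = str(err)
            continue
        if len(hops) > 1:
            report[source] = f"chain of {len(hops)} hops; redirect straight to {final}"
    return report
```

Fixing a chain usually means pointing the first URL directly at the final destination, so crawlers spend one request instead of several.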

With that said, let me share 3 ways to help search engines index your pages responsibly.

Step 1: Canonicalize Pages for Better Organization

Canonicalization means consolidating duplicate or near-duplicate URLs under one preferred URL. It avoids confusing search engines, which can lead to poor indexing or, worse, no indexing at all.

If a page is accessible through multiple URLs (e.g., separate homepage URLs for mobile & desktop users), search engines see it as duplicate content.

If you don’t specify the original page (the one you want in the index), search engines will pick one themselves. This may or may not be in your favor.
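You declare the original with a `<link rel="canonical" href="...">` element in the `<head>` of each variant. The harder part is deciding, consistently, which variant is the canonical one. Here’s a minimal Python sketch that folds URL variants into one canonical URL and builds the tag. The normalization rules (HTTPS only, one preferred host, no trailing slash, UTM parameters dropped, query parameters sorted) are assumptions for illustration; pick rules that match how your site is actually served.

```python
import html
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical rule: these query parameters never change page content.
TRACKING_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "utm_term", "utm_content"}


def canonical_url(url, preferred_host, host_aliases=()):
    """Map a URL variant to the single URL you want search engines to index."""
    parts = urlsplit(url)
    host = parts.netloc.lower()
    # Fold alias hosts (e.g. a bare domain or an m. mobile host) onto the preferred host.
    if host in host_aliases:
        host = preferred_host
    # Keep the root "/", but drop trailing slashes elsewhere.
    path = parts.path or "/"
    if path != "/" and path.endswith("/"):
        path = path.rstrip("/")
    # Drop tracking parameters and sort the rest so parameter order doesn't matter.
    query = urlencode(sorted(
        (k, v) for k, v in parse_qsl(parts.query, keep_blank_values=True)
        if k not in TRACKING_PARAMS
    ))
    # Always HTTPS; fragments (#...) never reach the server, so drop them.
    return urlunsplit(("https", host, path, query, ""))


def canonical_link_tag(url, preferred_host, host_aliases=()):
    """Build the <link rel="canonical"> element for any variant of a page."""
    href = html.escape(canonical_url(url, preferred_host, host_aliases), quote=True)
    return f'<link rel="canonical" href="{href}">'
```

With `host_aliases={"example.com", "m.example.com"}`, the mobile and desktop homepages both produce the same tag pointing at `https://www.example.com/`, which is exactly the duplicate case described above.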

Step 2: Internally Link Newly Created Pages

I’ll never get tired of repeating this opinion: “Internal linking is the most underrated on-page SEO technique.”

If you’ve published a new page, link to it internally from a page that’s already indexed and ranking (whatever its rank).

Not sure if you know this already, but spammy sites link to credible pages just so search engines will crawl their pages (yes! search engines can crawl backward as well) & maybe index them.
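To do this at scale, you need to know which new pages still lack a link from an already-indexed page. Here’s a minimal Python sketch, assuming you have a crawl of your own site as a dictionary of page to internal links, plus a set of URLs you know are indexed (for example, exported from Search Console). The function names are hypothetical.

```python
from collections import defaultdict


def inbound_links(site_links):
    """Invert {page: [pages it links to]} into {page: set of pages linking to it}."""
    inbound = defaultdict(set)
    for source, targets in site_links.items():
        for target in targets:
            if target != source:  # ignore self-links
                inbound[target].add(source)
    return inbound


def unlinked_new_pages(site_links, new_pages, indexed_pages):
    """Return new pages that no already-indexed page links to, sorted for stable output."""
    inbound = inbound_links(site_links)
    return sorted(
        page for page in new_pages
        if not (inbound.get(page, set()) & indexed_pages)
    )
```

Every page this returns is a candidate for a fresh internal link from one of your indexed pages.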

Step 3: No Broken Links

Broken links are a turn-off for anyone, search engines included. Then there are orphan pages: pages that no other page on the web links to. Search engines can’t reach such a page by following links.

Broken links can create many orphan pages if those pages have no other internal or external links pointing to them.

How can you get a page to rank if it’s not crawled in the first place? To be crawled, a page must be linked from at least one page that can be (or has been) crawled.
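A rough way to spot both problems on your own site is to simulate what a crawler does: start at the homepage, follow internal links breadth-first, record links that point to pages that don’t exist (broken), and list live pages the walk never reaches (orphans). This minimal Python sketch assumes you have the site’s internal links as a dictionary and the set of URLs that actually load; both inputs and the `crawl_report` name are hypothetical.

```python
from collections import deque


def crawl_report(site_links, live_pages, start="/"):
    """Breadth-first walk over internal links starting at `start`.

    Returns (broken, orphans):
      broken  - (source, target) links whose target isn't a live page
      orphans - live pages never reached by following links from `start`
    """
    reachable = {start}
    queue = deque([start])
    broken = []
    while queue:
        page = queue.popleft()
        for target in site_links.get(page, []):
            if target not in live_pages:
                broken.append((page, target))
            elif target not in reachable:
                reachable.add(target)
                queue.append(target)
    orphans = sorted(live_pages - reachable)
    return broken, orphans
```

It only sees internal links, so a page it reports as an orphan might still be reachable through an external backlink; and broken links are only found on pages the walk actually reaches.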

Bonus: Use Google’s Free Indexing API

If you have a large site with hundreds or thousands of pages, Google’s free Indexing API can help you a lot. It lets you notify Google directly whenever you add, update, or remove pages on a site you’ve verified in Search Console. Note that Google officially supports this API only for pages with JobPosting or BroadcastEvent (livestream) structured data.

Setting up this API is complex & requires advanced technical skills. Once it’s wired into your publishing workflow, though, you don’t have to do anything: every time a page is updated, published, or removed, your site calls the API & Google is informed of the change.
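For a sense of what “calling the API” looks like, here’s a minimal Python sketch using only the standard library. It posts to the Indexing API’s `urlNotifications:publish` endpoint with a JSON body of `url` plus `type` (`URL_UPDATED` or `URL_DELETED`). Getting the OAuth 2.0 access token (a service account with the `https://www.googleapis.com/auth/indexing` scope, added as an owner of your Search Console property) is left out; the `access_token` argument is assumed to come from that step, and the function names are hypothetical.

```python
import json
import urllib.request

PUBLISH_ENDPOINT = "https://indexing.googleapis.com/v3/urlNotifications:publish"


def build_notification(page_url, access_token, removed=False):
    """Build (but don't send) a publish request telling Google a URL changed or was removed."""
    body = {"url": page_url, "type": "URL_DELETED" if removed else "URL_UPDATED"}
    return urllib.request.Request(
        PUBLISH_ENDPOINT,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {access_token}",
        },
        method="POST",
    )


def notify_google(page_url, access_token, removed=False):
    """Send the notification and return Google's JSON response."""
    request = build_notification(page_url, access_token, removed)
    with urllib.request.urlopen(request, timeout=10) as response:
        return json.load(response)
```

To make it hands-off, call `notify_google(url, token)` from whatever hook your CMS runs after a page is published or updated, and `notify_google(url, token, removed=True)` after a deletion. Splitting out `build_notification` keeps the request easy to inspect without hitting the network.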

If you’re looking to win online, here’s how I can help:

- Sit with you 1-on-1 & create a content marketing strategy for your startup. Hire me for paid consulting.

- Write blogs, social posts, and emails for you. Get in touch here with queries (please mention you found this email in the newsletter so I notice it quickly).

- Join my tribe on Twitter, where I share SEO tips every single day & a teaser of the next issue of Letters ByDavey.