TL;DR & Summary

Reading time: 5 minutes

In an age of instant gratification, people don’t want to wait for SEO to show its potential, especially when indexing issues haunt their site from day one.

Most of the time, people don’t take the trouble to fix minor issues that can have a huge impact on their organic traffic. Indexing is the clearance you need before you can earn any organic traffic from search: a page that isn’t indexed can’t rank.

On Google alone, an estimated 7 billion searches happen each & every day. Even a fraction of that pie would place you in the top 1% of your industry.

As more and more people understand & implement indexing best practices, they’re able to tap into evergreen traffic from search. Fixing indexing on your site is easier than it looks. It’s well within your reach & this issue will prove that to you.

Here are 3 simple steps to fix indexing problems without breaking a sweat & unlock evergreen organic traffic:

- Learn the search essentials by heart

- Understand the intent of the search algorithm

- Think like search engines

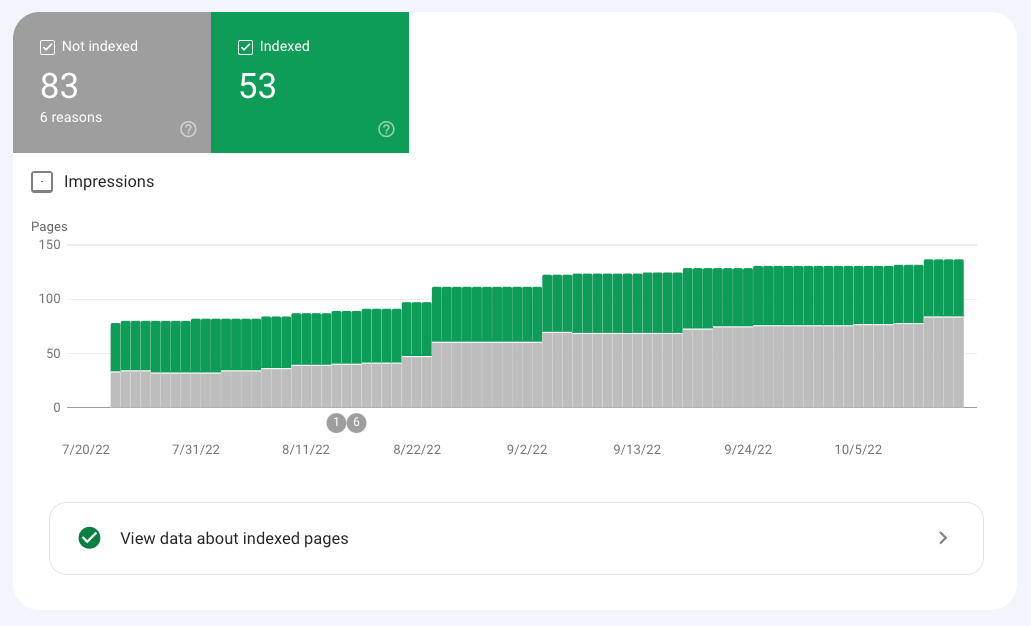

“Crawled - currently not indexed”: does that sound familiar? For a site owner, there’s nothing more painful than seeing URLs under the “Excluded” tab labeled as crawled but not indexed. This sucks.

It’s common to see some URLs in that tab; even my own site has a few. However, you need to fix this periodically or have someone do it for you. If you don’t, don’t be surprised if your site gets little to no traffic.

You’ll end up wasting your time & spending sleepless nights tossing and turning, wondering what’s wrong with your site.

As you keep publishing more content, you’ll see more URLs show up here, each with some indexing-related problem or other. However, there’s a simple fix.

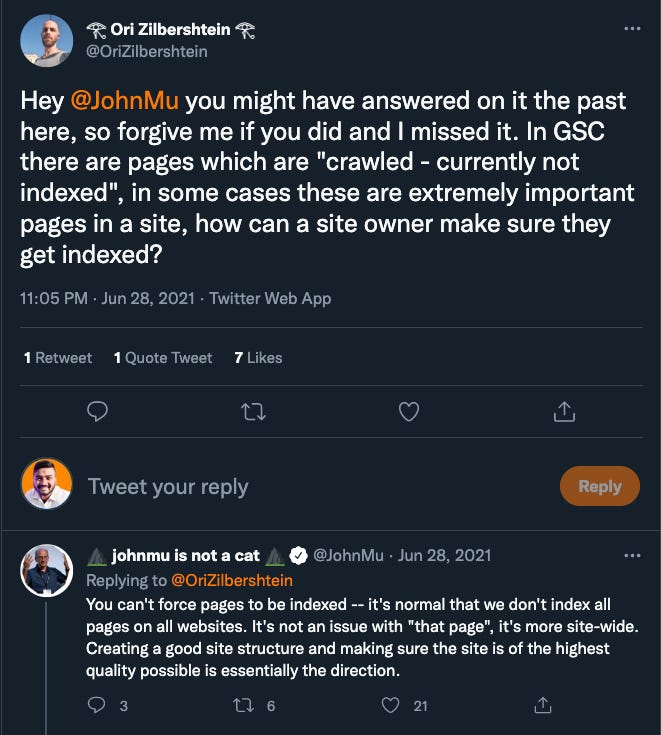

Usually, indexing issues come down to the quality of the site as a whole. When specific URLs have indexing issues, the problem usually isn’t the pages themselves; it’s the overall site. Here’s what Google’s John Mueller replied to a user asking a similar question.

What most people do is try every black-hat technique to fix these issues, without referring to the official documentation or consulting professionals. What’s missing are simple, actionable steps. Let me first walk you through the mistakes that cause indexing issues; then we’ll talk about the solution.

Shall we?

Why do indexing issues happen in the first place?

Before talking about the operation, you need to diagnose the problem. This will help you pinpoint the problem & hence the solution. Here are some reasons your site might have indexing issues, and why you aren’t getting the traffic you think you should.

- You might have added a different version of your domain to Google Search Console (GSC) than the one that’s live on the web. For example, check whether your site loads with the ‘www’ subdomain in the browser while only the non-www version is added in GSC (or vice versa).

- You might not have created a sitemap, let alone submitted one to GSC. Sitemaps are one of the main ways search engines discover the pages on your site. Keep in mind, though, that a sitemap doesn’t guarantee indexing. Google’s index already holds hundreds of billions of pages, and ranking results efficiently at that scale is a huge challenge. It’s natural for Google to be stricter about what it indexes to keep quality up.

- Most of the pages showing up in my GSC as crawled but not indexed are pages I don’t want in search anyway. That’s okay, but there’s a better solution: tagging those pages as noindex moves them out of that report & brings the number down. It feels good to see the numbers go down.

- Duplicate content is another reason your site might have indexing issues. Remember, John said that when certain URLs have indexing issues, it’s usually because of the site and not the URL itself. Like duplicate content, thin content also plays a major role in indexing issues: your pages don’t have enough content for search engines to understand their context.
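Three of these points (the www/non-www mismatch, sitemaps, and noindex) meet in one place: a sitemap should list only the pages you actually want indexed, on the same host as your GSC property. Anything you’ve tagged noindex (for example with `<meta name="robots" content="noindex">`) or duplicated doesn’t belong in it. Below is a minimal Python sketch, assuming you already have a list of your URLs and know which ones are noindexed. The `Page` type, `build_sitemap` function, and example.com URLs are hypothetical, for illustration only; this is not part of any SEO tool.

```python
from dataclasses import dataclass
from urllib.parse import urlparse
import xml.etree.ElementTree as ET

# Namespace required by the sitemaps.org protocol
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


@dataclass
class Page:
    # Hypothetical record for one page on your site
    url: str
    noindex: bool = False  # True if the page carries a robots noindex tag


def build_sitemap(pages: list[Page], host: str) -> str:
    """Return sitemap XML listing only indexable pages on `host`.

    `host` is assumed to match the property verified in GSC,
    e.g. "www.example.com" rather than "example.com".
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    seen: set[str] = set()
    for page in pages:
        # Skip URLs on the other www/non-www version of the domain
        if urlparse(page.url).netloc != host:
            continue
        # Skip noindexed pages and repeated URLs: both send mixed signals
        if page.noindex or page.url in seen:
            continue
        seen.add(page.url)
        url_el = ET.SubElement(urlset, "url")
        ET.SubElement(url_el, "loc").text = page.url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    pages = [
        Page("https://www.example.com/"),
        Page("https://www.example.com/blog/indexing-fixes"),
        Page("https://www.example.com/thank-you", noindex=True),
        Page("https://example.com/blog/indexing-fixes"),  # non-www copy
    ]
    print(build_sitemap(pages, host="www.example.com"))
```

Skipping mismatched hosts silently keeps the sketch short; in a real audit you’d probably want to list those URLs so you can fix the redirect or the GSC property instead.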

Now that you have the context of what could cause indexing problems, let’s talk about solutions.

Step 1: Read the Webmaster Guidelines (now Google Search Essentials) at least once

In my experience, the most common reason 9 out of 10 sites have indexing issues is the quality of the site. By quality, I mean helpful content on topics users are already searching for. If there’s little to no search volume for a topic (topics, not keywords), Google won’t feel the need to index it. After all, storing the indexed pages takes physical data centers worth billions of dollars.

The guidelines will point you in the right direction for creating a high-quality site that’s helpful to its users. Google can read & understand your content. You can’t fool it anymore.

Please stop wasting time trying to hack the algorithm & make friends with it instead.

Step 2: Understand the intent of the algorithm

Google’s automated crawling-indexing-ranking system is obsessed with providing relevant results to its users. What’s obvious to you might be information worth thousands of dollars to someone else.

Do you know how much the information you’ve gathered matters to your audience? Do you know the circumstances in which your audience searches on Google?

You & the search algorithm are on the same side, even if you don’t realize it. You both share the same objective: yours is to create pages with helpful information & Google’s is to put that information in front of the right users.

Step 3: Think like search engines (& don’t beat around the bush)

Again, let’s circle back to quality issues with your site.

When you build a site to fool a piece of code, you’re trying to fool a system that’s designed to replicate your judgment. For starters, avoid inserting hidden text that only search engines can read.

Because such text is meant to be read only by search engines (it’s buried in the code, or its font is the same color as the background), it’s easy for search engines to flag it as spam.
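To make the “same color as the background” trick concrete, here’s a rough Python sketch you could use to audit your own pages. It scans HTML for tags whose inline `style` sets the text color equal to the background color. `find_hidden_text` and `HiddenTextFinder` are hypothetical helpers built on the standard library’s `html.parser`. The sketch only compares literal inline values (so `#fff` vs `#ffffff` won’t match, and external CSS, `display:none`, or off-screen tricks aren’t checked). Treat it as a self-audit aid, not a description of how Google actually detects hidden text.

```python
from html.parser import HTMLParser


def parse_style(style: str) -> dict[str, str]:
    """Turn 'color: #FFF; background-color: #fff' into a property dict."""
    props = {}
    for decl in style.split(";"):
        if ":" in decl:
            name, value = decl.split(":", 1)
            # Normalize case and spaces so 'rgb(0, 0, 0)' == 'rgb(0,0,0)'
            props[name.strip().lower()] = value.strip().lower().replace(" ", "")
    return props


class HiddenTextFinder(HTMLParser):
    """Flags tags whose inline text color equals their inline background."""

    def __init__(self) -> None:
        super().__init__()
        self.suspects: list[str] = []

    def handle_starttag(self, tag, attrs):
        style = dict(attrs).get("style") or ""
        props = parse_style(style)
        color = props.get("color")
        # The 'background' shorthand only matches when it is a bare color
        background = props.get("background-color") or props.get("background")
        if color and color == background:
            self.suspects.append(f'<{tag} style="{style}">')


def find_hidden_text(html: str) -> list[str]:
    """Return the opening tags of elements that look like hidden text."""
    finder = HiddenTextFinder()
    finder.feed(html)
    finder.close()
    return finder.suspects


if __name__ == "__main__":
    page = (
        "<p>Welcome to our store!</p>"
        '<div style="color: #FFF; background-color: #fff">cheap shoes cheap shoes</div>'
    )
    print(find_hidden_text(page))
```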

Another thing to avoid is keyword stuffing. And please, please don’t use meta keywords. Google (& other major search engines) stopped using the meta keywords tag over a decade ago.

Link exchanges are yet another way search engines can tell that the quality of a site isn’t up to the mark. When you indulge in link exchanges, the quality of those links is questionable.

Links that are worthy won’t require an exchange. Don’t assume a reciprocal link exchange will go unnoticed. I hate reaching out for links; I think it’s better to put your time & energy into creating link-worthy content than to waste it begging for links.

Pro tip: Keep an eye on the backlinks your site is getting. Sometimes spammers link out to high-authority sites just to get their own pages crawled by search engines. Such links can also cause indexing problems. Most of the time, these links will be highly irrelevant or, worse, spammy/unethical.

If you’re looking to win online, here’s how I can help:

- Sit with you 1-on-1 & create a content marketing strategy for your startup. Hire me for paid consulting.

- Write blogs, social posts, and emails for you. Get in touch here with queries (please mention that you found my email in the newsletter so I notice it quickly).

- Join my tribe on Twitter, where I share SEO tips every single day & a teaser of the next issue of Letters ByDavey.