Remember the few dollars you saved on web hosting? Yeah, that might be causing crawling issues, because that cheap server can't keep up with crawl requests. Or is it something else? Let's explore.

By the end of this issue, you will be able to:

- Verify whether your website's invisibility is caused by crawling issues

- Be aware of the major crawling issues that result in low traffic

- Identify the crawling issues you can safely ignore

You're trying to get traffic to your site, but a lot of things have to go right for that to happen. To index your pages properly, search engines must first be able to crawl them. If they can't, your customers won't be able to find your pages.
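One quick way to rule out a crawl block is to check your robots.txt rules. Here's a minimal sketch using Python's standard `urllib.robotparser`. The robots.txt content, the example.com URLs and the `is_crawlable` helper are hypothetical. Keep in mind that robots.txt controls crawling, not indexing.

```python
from urllib.robotparser import RobotFileParser


def is_crawlable(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """Check whether the given robots.txt rules let user_agent crawl url.

    Python's parser applies the first matching rule, while Google uses the most
    specific (longest) match, so complex files may give different answers.
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse() also marks the rules as loaded
    return parser.can_fetch(user_agent, url)


# Hypothetical robots.txt, for illustration only.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /staging/
"""

for url in ("https://example.com/blog/crawl-budget", "https://example.com/admin/login"):
    print(url, "->", "crawlable" if is_crawlable(ROBOTS_TXT, url) else "blocked")
# https://example.com/blog/crawl-budget -> crawlable
# https://example.com/admin/login -> blocked
```

If a page you want found shows up as blocked, fix robots.txt before anything else.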

Crawling is highly important, but it's just as crucial to know which warnings you don't need to take seriously. Here are 3 crawl-related warnings and errors you can safely ignore.


Step 1: Noindex tags

Noindex tags tell search engines not to index the pages they're on. They don't stop crawlers from crawling those pages, though. Crawlers can still visit them, for example to find links to the pages you do want indexed. You'll find reports related to noindex in Google Search Console.
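A noindex directive can sit in two places: a `<meta name="robots">` tag in the page's HTML, or an `X-Robots-Tag` HTTP header. Here's a minimal sketch that checks both, using only Python's standard `html.parser`. The `is_noindex` helper and the sample markup are illustrative. It doesn't handle user-agent-prefixed header values such as `googlebot: noindex`.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # html.parser lowercases tag and attribute names; values may be None.
        attr_map = {name: (value or "") for name, value in attrs}
        if attr_map.get("name", "").lower() in ("robots", "googlebot"):
            # content is a comma-separated list such as "noindex, follow"
            self.directives.extend(
                part.strip().lower() for part in attr_map.get("content", "").split(",")
            )


def is_noindex(html, headers=None):
    """Return True if the page opts out of indexing via meta tag or X-Robots-Tag header."""
    parser = RobotsMetaParser()
    parser.feed(html)
    directives = list(parser.directives)
    for name, value in (headers or {}).items():
        if name.lower() == "x-robots-tag":
            # Prefixed values like "googlebot: noindex" are not handled in this sketch.
            directives.extend(part.strip().lower() for part in value.split(","))
    # "none" is shorthand for "noindex, nofollow".
    return "noindex" in directives or "none" in directives


print(is_noindex('<head><meta name="robots" content="noindex, follow"></head>'))  # True
print(is_noindex('<head><meta name="robots" content="index, follow"></head>'))    # False
```

You can run this over the URLs in the noindex report to see which pages carry the tag on purpose.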

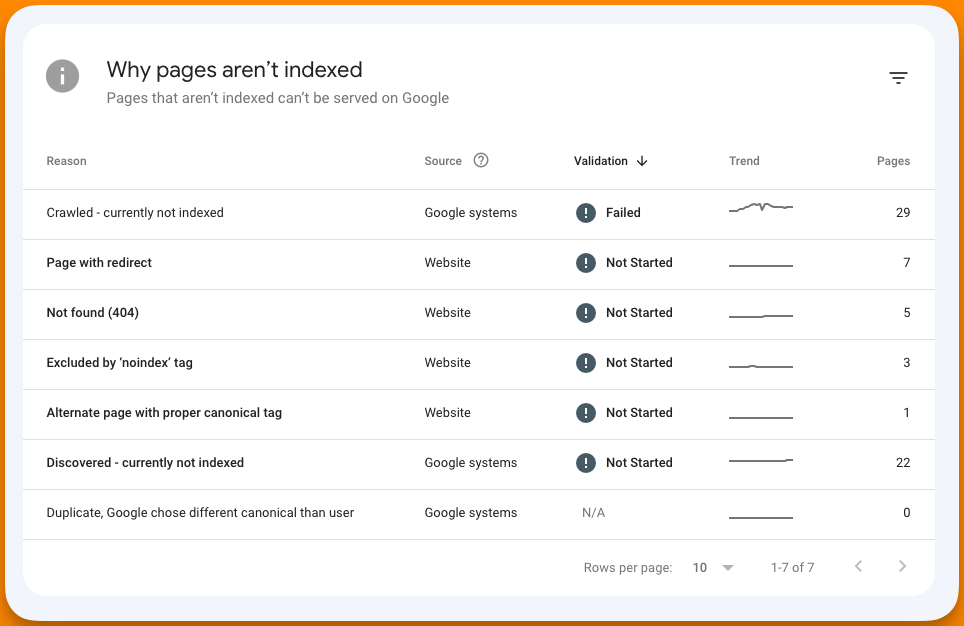

You'll find the report under Indexing > Pages, in the Page indexing report.

Most crawling warnings in Google Search Console can be ignored because they aren't actual issues. Noindex is a highly subjective topic, but once you dig deeper, you'll see that certain warnings are safe to ignore.

I'll make it simpler for you to decide whether a noindex warning can be ignored. There are 3 main types of noindex pages you can ignore:

- Temporary pages: Any landing pages or test pages you've tagged as noindex will show up in the warning report. To the system, they're just noindexed pages, but you know it's okay for them not to be indexed.

- Private pages: Every site has pages that aren't meant for the public, such as staging sites, internal tools and admin login pages. When these pages show up in noindex reports, they're safe to ignore.

- User-generated content: A lot of user-generated content (UGC), such as forum posts and comments, is marked noindex to protect privacy & avoid spam. Unless you're Reddit, you don't want forum comments and other UGC showing up in search results, do you?

Take all other noindex warnings seriously, and seek professional help if needed.

Step 2: Thin meta descriptions

Since Google started showing its own versions of meta descriptions, many site owners ignore them completely. Most don't even know that Google rewrites meta descriptions to match the search query. Here's why Google rewrites them.

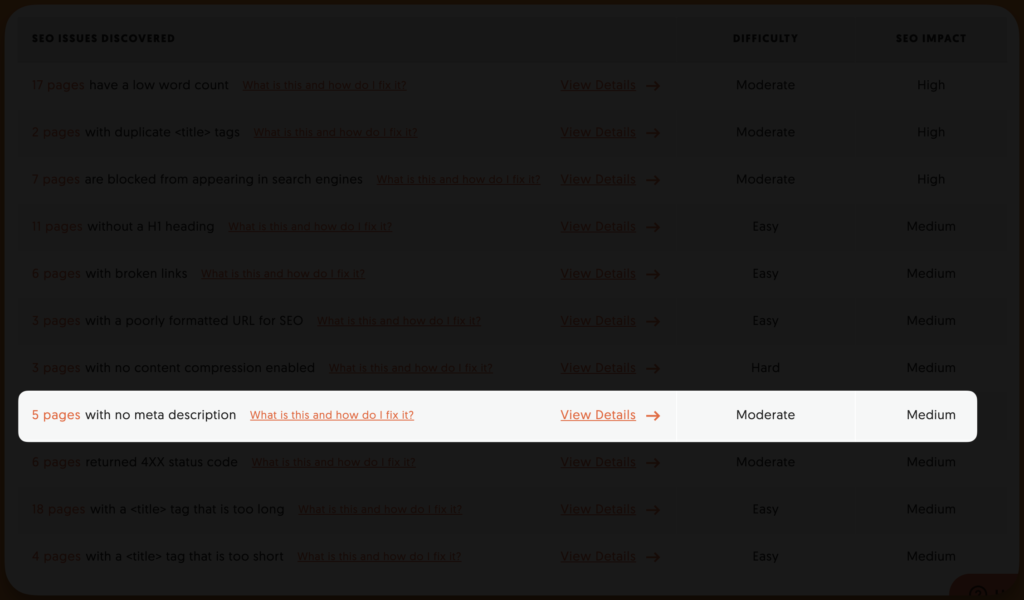

Most keyword research tools offer a site audit feature. I've personally used the ones in Ubersuggest and Semrush. These audits flag pages with thin meta descriptions.

When a tool flags a thin meta description, it wants you to work on pages that have the potential to rank for your target keywords. The meta description is one of the prominent places where search engines look for hints about what a page is about.

This is what a site audit looks like in Ubersuggest.

A rule of thumb: ignore thin meta descriptions on pages that are temporary (internal pages), user-generated or heavy on rich snippets. For example, ecommerce product pages often have meta descriptions that contain review counts & rating details.
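The rule of thumb above can be written as a simple filter over site audit results. This is a minimal sketch that assumes you've exported each page's URL, meta description and a page-type label. The 70-character threshold and the page-type labels are hypothetical, because every audit tool uses its own cut-off.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical threshold: audit tools each use their own cut-off.
MIN_DESCRIPTION_LENGTH = 70

# Page types the rule of thumb says you can usually skip (labels are illustrative).
IGNORABLE_TYPES = {"temporary", "internal", "ugc", "product_rich_snippet"}


@dataclass
class Page:
    url: str
    meta_description: Optional[str]
    page_type: str  # e.g. "blog", "landing", "ugc"


def is_thin(description: Optional[str], min_length: int = MIN_DESCRIPTION_LENGTH) -> bool:
    """A description is 'thin' if it is missing or shorter than min_length characters."""
    return description is None or len(description.strip()) < min_length


def pages_worth_fixing(pages):
    """Return URLs with thin descriptions, skipping page types you can usually ignore."""
    return [
        page.url
        for page in pages
        if is_thin(page.meta_description) and page.page_type not in IGNORABLE_TYPES
    ]


pages = [
    Page("/blog/crawl-budget", "Crawl budget.", "blog"),
    Page("/forum/thread-42", None, "ugc"),
    Page("/shop/red-shoes", "4.8 stars, 120 reviews", "product_rich_snippet"),
    Page(
        "/guides/noindex",
        "A practical guide to noindex tags: which Search Console warnings "
        "you can ignore and which need a fix.",
        "blog",
    ),
]
print(pages_worth_fixing(pages))  # ['/blog/crawl-budget']
```

Only the blog post with a two-word description makes the list. The forum thread and the product page are skipped by design.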

Step 3: Missing alt text

Just by adding alt text, I boosted the on-page SEO score for one of my ex-clients enough to claim the #1 spot on the SERPs. Almost every single image was missing alt text. I added relevant descriptions and requested indexing, and that's all it took to boost traffic, both internal & from search.

However, alt text isn't always necessary. Here are some genuine scenarios where you can ignore alt text warnings.

- Decorative Images: When an image is purely decorative and adds no meaningful content or context to the page, there’s usually no need for alt text. Alt text is intended to provide information to users who can’t see the image, and for decorative images, it serves no purpose.

- Images on Non-Public Pages: If an image is placed on a non-public page or behind a login wall where search engines can’t access or index the content, providing alt text may not be necessary. Search engines can’t crawl and rank content they can’t access.

- Image Replacement with CSS: Some web designers use CSS techniques to replace standard HTML elements with images. In such cases, the images are intended solely for visual styling, and the actual content is in the HTML, making alt text redundant.
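To tell genuinely missing alt text apart from decorative images, it helps to know that HTML accessibility guidance marks decorative images with an empty `alt=""` attribute rather than leaving `alt` out entirely. Here's a minimal Python sketch, using the standard `html.parser`, that sorts images into those two buckets. The `audit_alt_text` helper and the sample markup are hypothetical.

```python
from html.parser import HTMLParser


class AltTextAuditor(HTMLParser):
    """Sorts <img> tags into 'missing alt' and 'explicitly decorative' buckets."""

    def __init__(self):
        super().__init__()
        self.missing = []     # <img> with no alt attribute at all: worth reviewing
        self.decorative = []  # <img alt="">: marked decorative on purpose

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attr_map = dict(attrs)
        src = attr_map.get("src") or "(no src)"
        if "alt" not in attr_map:
            self.missing.append(src)
        elif not (attr_map["alt"] or "").strip():  # alt="" or a bare alt attribute
            self.decorative.append(src)


def audit_alt_text(html):
    """Return (missing, decorative) lists of image sources found in html."""
    auditor = AltTextAuditor()
    auditor.feed(html)
    auditor.close()
    return auditor.missing, auditor.decorative


page = (
    '<img src="team.jpg" alt="Support team working in the office">'
    '<img src="divider.png" alt="">'
    '<img src="pricing-chart.png">'
)
missing, decorative = audit_alt_text(page)
print("Missing alt:", missing)     # Missing alt: ['pricing-chart.png']
print("Decorative:", decorative)   # Decorative: ['divider.png']
```

Only the images in the first bucket need your attention. The pricing chart conveys information, so it's a real fix.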

Images often play a crucial role in conveying information on websites, so ensuring they have descriptive alt text is a standard SEO best practice.

Bonus: Meta keywords

Major search engines like Google no longer consider the meta keywords tag. Thank the "smart" SEOs who stuffed keywords into it, where they hid in plain sight in the HTML code.

For a while, this gave pages the SEO help they needed to rank #1. So if an audit or an SEO says meta keywords are missing, you can & should ignore it.

Video Guide

While we're talking about crawling, one topic that often goes unmentioned is crawl budget. If you have any questions about crawl budget, here's a podcast you should listen to.

If you've never heard of crawl budget, you should definitely listen to this. It's all you need.

Today’s action steps →

- Check the crawling report in Google Search Console

- Spend some time analyzing the reports & sorting out the warnings that can be ignored. Along the way, you'll find the warnings that need your attention.

- Do a site audit using tools like Ubersuggest, Semrush or Ahrefs. Get in touch if you need professional help.

SEO this week

- What’s new with structured data and Google search rankings? [Video]

- Now you can merge two types of structured data, Google confirms

- Google SERPs no longer show rich snippets for events

- Are you writing unhelpful content? Google shares hints

- Google released new tools to learn more about images in search

Masters of SEO

- Have an internal team of SEOs? Here’s how to train them for the future

- Worst SEO horror stories for 2023

- How to avoid keyword cannibalization

- How can SEOs collaborate with developers?

- Own a Shopify store? Here’s how to do SEO for the store in 2023

How can I help you?

I put a lot of effort into every edition of this newsletter. I want to help you in every possible way, but I can only do so much by myself. Tell me what you need help with. Get in touch with me on LinkedIn, Twitter or by email to share your thoughts & the questions you want addressed. I'd be more than happy to help.

Whenever you’re ready to dominate SERPs, here’s how I can help:

- Sit with you 1-on-1 & create a content marketing strategy for your startup. Hire me for consulting.

- Write blogs, social posts and emails for you. Get in touch here with queries (please mention that you found this email in the newsletter so I notice it quickly).

- Join my tribe on Twitter & LinkedIn, where I share SEO tips every single day & teasers of the next issue of Letters ByDavey.