Dealing with search engine issues? Your robots.txt file might be the problem. In case you didn’t know, unnecessary crawling adds server load, which can slow down your site. That’s how critical one small file can be.

By the end of this issue, you will be able to:

- Understand the role of robots.txt for your website

- Start using a robots.txt file for controlled crawling

- Be aware of the mistakes to avoid

Robots.txt files don’t have to be a boring wall of code. See what Nike has done with their robots.txt file.

As easy as it is to start a website, maintaining one isn’t. There’s content that needs to be actionable, there’s UX that should be friendly, there’s SEO, and then there are the “set and forget” settings most non-SEO founders ignore, like robots.txt files. Some sites don’t even have one, especially those that don’t run on WordPress.

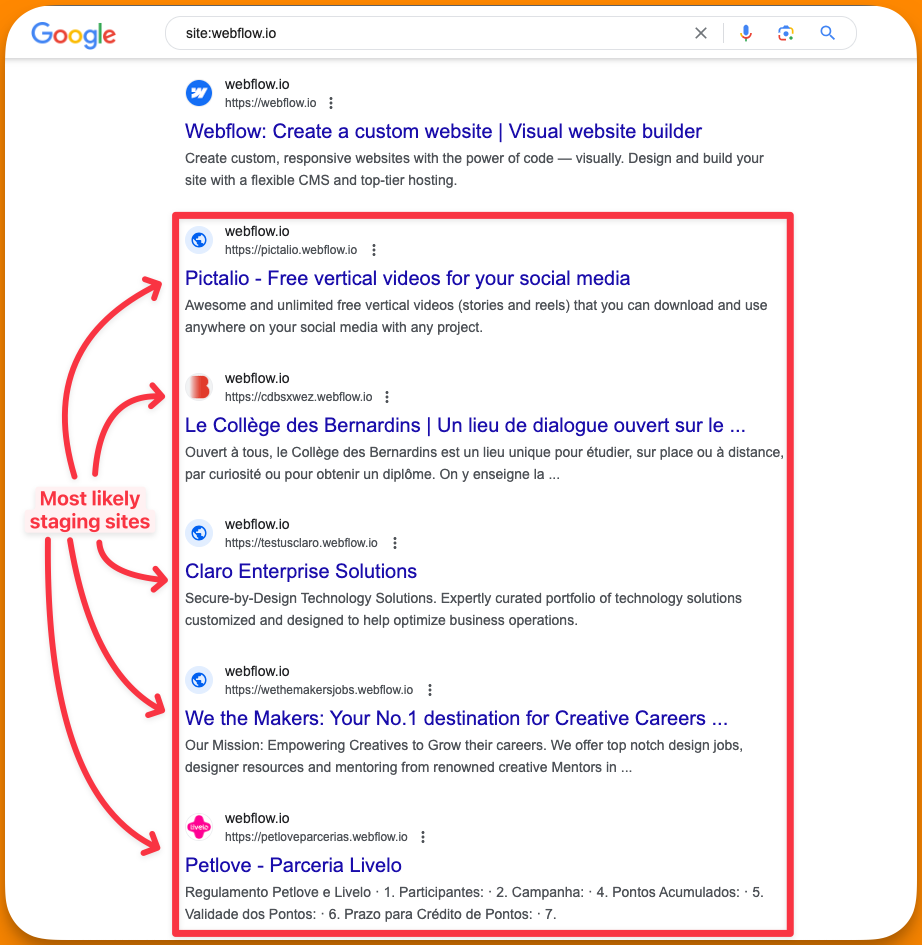

Many founders struggle to hide what shouldn’t show up on search engines (like staging sites). This is where robots.txt files come in handy.

Unwanted indexing can dilute your SEO traffic, which is bad for both you and your users. Excluding unwanted pages from crawling also reduces the load on your server.

As your site grows, you might need to update your robots.txt file, although most sites never will.

For most startup founders, a robots.txt file is one of the few “set and forget” things when it comes to SEO.

Before you can control crawl efficiency, know what a small file in your root folder can do.

Here’s what a robots.txt file can do for your website:

- Handle duplicate content

- Facilitate testing environments

- Prioritize which pages of your site get crawled

- Block crawlers from crawling your site

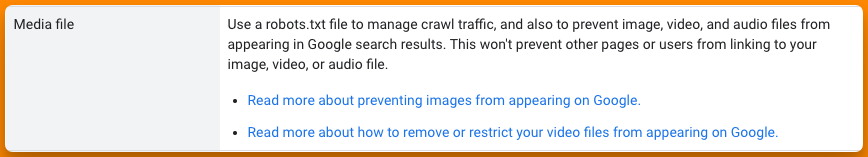

- Block media files from appearing in search results
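To make the list above concrete, here is a minimal sketch that uses Python’s standard `urllib.robotparser` module to check which URLs a crawler may fetch. The rules, the `BadBot` crawler name, and the example.com URLs are all made up for illustration. Python’s parser only does simple prefix matching, so treat this as a rough approximation of how a real search engine reads the file.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content (paths and bot name are assumptions).
ROBOTS_TXT = """\
User-agent: *
Disallow: /staging/
Disallow: /search

User-agent: BadBot
Disallow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def is_allowed(user_agent: str, url: str) -> bool:
    """Return True if the parsed rules let this crawler fetch the URL."""
    return parser.can_fetch(user_agent, url)


# Regular pages stay crawlable for ordinary crawlers.
print(is_allowed("Googlebot", "https://example.com/blog/post"))     # True
# The staging area is blocked for everyone.
print(is_allowed("Googlebot", "https://example.com/staging/home"))  # False
# The unwanted crawler is blocked from the whole site.
print(is_allowed("BadBot", "https://example.com/blog/post"))        # False
```

A check like this is handy before you deploy a new robots.txt file: list the URLs you care about and confirm none of them got blocked by accident.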

Google says “robots.txt is not a mechanism for keeping a web page out of Google”. Confusing, right? It’s easy to go wrong. I’ve gathered the top 3 mistakes you should avoid if you’re creating a robots.txt file for the first time.


Step 1: No sitemaps in the robots.txt file

If you want to rank the pages that ‘should’ rank, you need to specify the pages that shouldn’t. This is probably the most “SEO” thing you will get to do with the robots.txt file.

When you include your sitemap in the robots.txt file you can leverage the following benefits:

- Precise crawling: Robots.txt is one of the first files crawlers look for when visiting your site. When you list your sitemaps there (sitemaps are also among the first files crawlers look for), you help crawlers find them and prioritize your content accordingly.

- Better visibility: Sitemaps inform crawlers about the new pages & updates on the already published ones (lastmod data). By including this detail in the robots file, you ensure crawlers don’t miss it.

- Effective updated content: When any of your pages are updated, it’s important for those updates to reflect on SERPs as soon as possible. Web crawlers can find instructions for crawling and the updates to crawl in one place.

Bonus: If you have submitted your sitemap to Google Search Console, there’s no need to add sitemaps in robots.txt for Google again. However, since robots.txt is a publicly available file (visit yourdomainname.com/robots.txt), any search engine can crawl that file and discover your sitemap.

This increases the chances of showing up in front of users who use search engines other than Google. Unless you specifically want to, you don’t need to submit your sitemaps to other search engines separately. Lastly, you can include as many sitemaps as you need in your robots.txt file.
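Here is a small sketch of how a crawler might discover sitemaps from a robots.txt file, again using Python’s standard `urllib.robotparser` (its `site_maps()` method needs Python 3.8 or later). The file content and the sitemap URLs are hypothetical, and `list_sitemaps` is just a helper name I picked for the example.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt with two sitemap entries (URLs are assumptions).
ROBOTS_WITH_SITEMAPS = """\
User-agent: *
Disallow: /staging/

Sitemap: https://example.com/sitemap-pages.xml
Sitemap: https://example.com/sitemap-posts.xml
"""


def list_sitemaps(robots_txt: str) -> list[str]:
    """Return every Sitemap URL declared in the given robots.txt text."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    # site_maps() returns None when no Sitemap line is present.
    return parser.site_maps() or []


for sitemap_url in list_sitemaps(ROBOTS_WITH_SITEMAPS):
    print(sitemap_url)
```

Each `Sitemap:` line stands on its own, so it doesn’t matter which `User-agent` group it sits next to.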

Read more about XML sitemaps here

Step 2: Including noindex tags in the robots file

As of September 1, 2019, Google ignores noindex rules in the robots.txt file. If you still have noindex rules there, remove them, because the pages you don’t want on SERPs might actually be showing up. Instead, add a noindex tag in the `<head>` section of each page you don’t want indexed. Blocking crawlers from indexing a page doesn’t mean they won’t crawl it. In fact, they have to crawl the page to see the tag, so don’t disallow that same page in robots.txt. There are a few variations you should know about when no-indexing pages.

- Block all crawlers from indexing using `<meta name="robots" content="noindex">`

- Block Google from indexing using `<meta name="googlebot" content="noindex">`

- Block Bing from indexing using `<meta name="bingbot" content="noindex">`

- Block crawlers from indexing and from following links on the page using `<meta name="robots" content="noindex,nofollow">`

- Block crawlers from indexing but let them follow links on the page using `<meta name="robots" content="noindex,follow">`

Note:

- In the last two examples, you can replace “robots” with any crawling agent

- Learn more about all the major search engines and their crawling agents here

- If you’re a WordPress user, almost all SEO plugins have features for individual pages to add noindex tags
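If you want to double-check that a page really carries a noindex tag, a quick script can do it. This is a minimal sketch using Python’s built-in `html.parser`. The class and function names are my own, and it only looks at `<meta>` tags in the HTML you pass in, so it will not notice a noindex sent through an HTTP header.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots|googlebot|bingbot"> tags."""

    CRAWLER_NAMES = {"robots", "googlebot", "bingbot"}

    def __init__(self) -> None:
        super().__init__()
        self.directives: dict[str, set[str]] = {}

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        name = (attributes.get("name") or "").lower()
        content = (attributes.get("content") or "").lower()
        if name in self.CRAWLER_NAMES:
            tokens = {part.strip() for part in content.split(",") if part.strip()}
            self.directives.setdefault(name, set()).update(tokens)


def is_noindexed(html: str, crawler: str = "robots") -> bool:
    """True if the page tells all crawlers, or this specific one, not to index it."""
    parser = RobotsMetaParser()
    parser.feed(html)
    generic = parser.directives.get("robots", set())
    specific = parser.directives.get(crawler.lower(), set())
    return "noindex" in (generic | specific)


# Hypothetical page source for illustration.
PAGE = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
print(is_noindexed(PAGE))  # True
```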

Step 3: Poor use of wildcards

Crawlers understand only two wildcards in the robots.txt file: `*` (asterisk) and `$` (dollar). Each has a different use case: `*` matches any sequence of characters, and `$` marks the end of a URL. As simple as this sounds, writing rules with these wildcards can get complicated. Here are some bad ways to use wildcards in a robots.txt file:

- Overusing wildcards: Using too many wildcards can lead to confusion and may result in blocking more content than intended. For example, `Disallow: /*/*/*` might seem like it’s blocking a specific pattern, but it can end up blocking a large portion of the website.

- Misplaced wildcard: Placing the wildcard character (`*`) in the wrong place can lead to unintended consequences. For example, `Disallow: /*.html$` is intended to block all `.html` files, but if mistakenly written as `Disallow: /*html$`, it would block any URL that ends with ‘html’, not just `.html` files.

- Unnecessary use of wildcards: Sometimes, wildcards are used when they’re not needed, which can lead to confusion. For example, `Disallow: /parent/*` and `Disallow: /parent/` have the same effect, because every rule already matches as a prefix. The wildcard in the first rule is unnecessary.

- Incorrect assumption of RegEx support: The robots.txt file does not support regular expression syntax. Google and Bing recognize only the `*` and `$` wildcards, not full RegEx patterns. For example, using something like `Disallow: /parent/[0-9]*` to block any URL that starts with ‘/parent/’ followed by any number will not work as expected.
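To see these pitfalls in action, here is a rough sketch of wildcard matching in Python. It is a simplified model of how `*` and `$` behave, not Google’s or Bing’s actual implementation, and the function names are mine. Python’s own `urllib.robotparser` doesn’t handle wildcards, which is why the matching is hand-rolled here.

```python
import re


def robots_pattern_to_regex(pattern: str) -> re.Pattern:
    """Translate a robots.txt path pattern into an equivalent regex.

    Only two characters are special: "*" matches any sequence of characters
    and a trailing "$" anchors the end of the URL. Everything else,
    including "[0-9]", is treated as literal text.
    """
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    body = ".*".join(re.escape(part) for part in pattern.split("*"))
    return re.compile("^" + body + ("$" if anchored else ""))


def rule_matches(pattern: str, path: str) -> bool:
    """True if a Disallow/Allow pattern applies to the given URL path."""
    return robots_pattern_to_regex(pattern).match(path) is not None


# Misplaced wildcard: the missing dot catches more than .html files.
print(rule_matches("/*.html$", "/docs/about-html"))  # False
print(rule_matches("/*html$", "/docs/about-html"))   # True

# Unnecessary wildcard: both rules match the same path.
print(rule_matches("/parent/*", "/parent/child"))    # True
print(rule_matches("/parent/", "/parent/child"))     # True

# No RegEx support: "[0-9]" is literal text, so numbers are not matched.
print(rule_matches("/parent/[0-9]*", "/parent/123"))  # False
```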

Video Guide

I clearly don’t need to talk much about what you’ll find in the video I’ve attached. Enjoy.

Today’s action steps →

- Find your robots.txt file and see if you need to update it. You might need to block a thankless crawler 😉

- If you don’t already have a robots file, create one from scratch using this official guide

SEO this week

- Google has dropped the favicon crawling agent. Googlebot-Image must be allowed

- Google now supports structured data for paywalled content

- Google is testing Discover Feed on its valuable desktop home page

- Bing explains what AI means in search

- LLMs can now pick user preferences much more accurately

Masters of SEO

- Search generative experience and Retrieval augmented generation as the future

- How is SGE treating Black Friday & Cyber Monday snapshots?

- A few words on seasonality in SEO

- Why are so many people talking about indexing issues? Short answer – Priority Systems

- Kevin Indig takes a dig at core updates from Google

How can I help you?

I put a lot of effort into coming up with a single edition of this newsletter. I want to help you in every possible way. But I can do only so much by myself. I want you to tell me what you need help with. You can get in touch with me on LinkedIn, Twitter, or email to share your thoughts & questions that you want to be addressed. I’d be more than happy to help.

Whenever you’re ready to dominate SERPs, here’s how I can help:

- Sit with you 1-on-1 & create a content marketing strategy for your startup. Hire me for consulting

- Write blogs, social posts, and emails for you. Get in touch here with queries (Please mention you found this email in the newsletter to get noticed quickly)

- Join my tribe on Twitter & LinkedIn where I share SEO tips (every single day) & teaser of the next issue of Letters ByDavey